ChatGPT at USF: What do professors think?

USF history professor Michael Decker enforces a strict policy against the use of artificial intelligence (AI) in his classroom.

The only time his students encountered AI in class was when they critiqued AI-generated responses to their midterm exam prompt.

“I wanted them to think critically about the strengths and weaknesses of these machine-generated responses and to judge it as if they were in my shoes and grading such a submission,” Decker said.

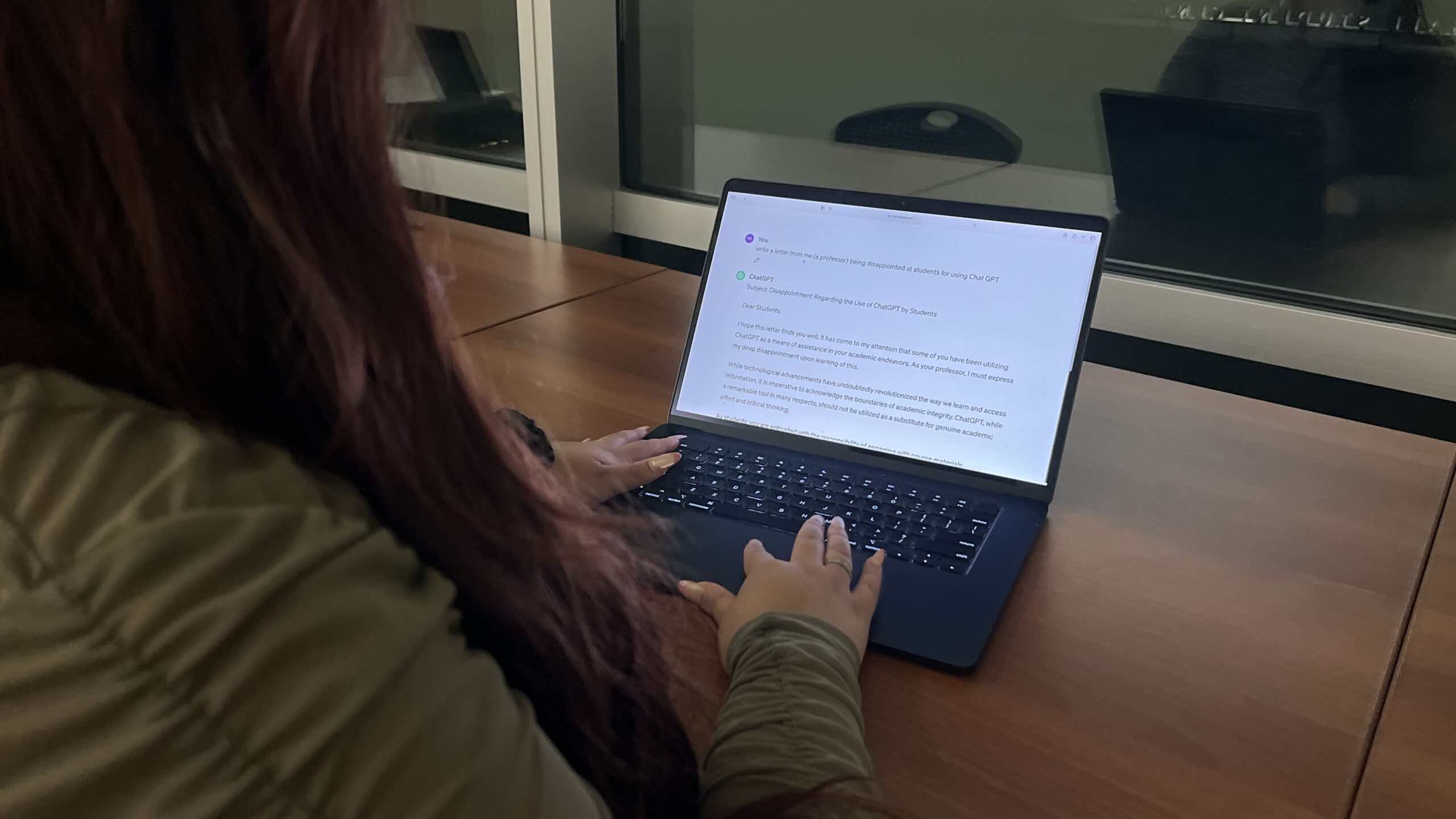

AI has made its mark on college education, though mostly for its notorious use as a cheat code for assignments.

As AI use rises, USF faculty members must decide whether using ChatGPT and other generative AI tools counts as an academic violation.

USF's current policy leaves decisions about AI use in the classroom to individual instructors. The policy follows guiding principles developed last year by USF's Generative AI Strategy Development team.

Those principles include awareness of bias, transparency and data protection.

Tim Henkel, director of the Center for Innovative Teaching and Learning, contributed to the guiding principles for AI at USF.

Henkel also applies these principles in the graduate course on ecological statistics that he teaches.

In the course, students learn to write code to perform statistical analyses and interpret the results. Generative AI helped students solve problems, according to Henkel.

“I encouraged it and didn’t worry about combating it,” Henkel said. “We had regular in-class activities for students to code with genAI tools.”

Henkel said students were discouraged from blindly copying an AI tool's output after prompting it with a problem, which he called a "recipe for disaster."

Teaching students about the misleading nature of software such as ChatGPT will deter them from using AI, according to Decker.

“Never has there been a more important time for developing critical thinking skills,” Decker said. “These tools misremember information… they should never replace human thinking and discernment.”

He said generative AI software is trained to combine and reproduce information drawn from only a few scholarly sources, which can skew a student's submission.

“They’re only getting part of the story,” Decker said.

Because AI will only become more prominent as society moves deeper into the digital age, it should no longer be viewed as an adversary but as an ally, according to Decker.

He said the tools can enhance a student's learning experience and should not be sidelined entirely as long as precautions are taken. For example, students can use ChatGPT to outline a paper, develop a preliminary position or simply organize their ideas, Decker said.

Because AI is here to stay, the university should work to accommodate this “seismic shift,” according to Decker.

“The key selling point with any piece of this is to persuade students to take ownership of their learning, not just their education,” Decker said. “We have to find a way to productively adapt and adopt to best serve the needs of our students.”

However, he said proceeding with caution is necessary to avoid misuse of such tools.

“Harnessing its power in the service of learning, rather than replacing learning, comprises the greatest challenge,” Decker said.